In this post, I will share my experience of building a Kubernetes cluster on your own server.

For this tutorial, I will create a private Kubernetes cluster. You will need either several computers or a single computer powerful enough to run several virtual machines.

Hardware

I use a single computer to run a multi-node environment.

- Intel Core i7 8700 6 Core, 12 Threads. (For multiple nodes.)

- 32GB RAM. (For multiple nodes.)

- 512GB SSD.

- NVIDIA RTX 2070 O8G. (Optional, only if you need GPU support on Kubernetes.)

Environment

1 x Ubuntu (host machine) as master.

4 x CentOS (via Vagrant) as nodes.

@ For the best results, consider using a Linux distribution on the host machine.

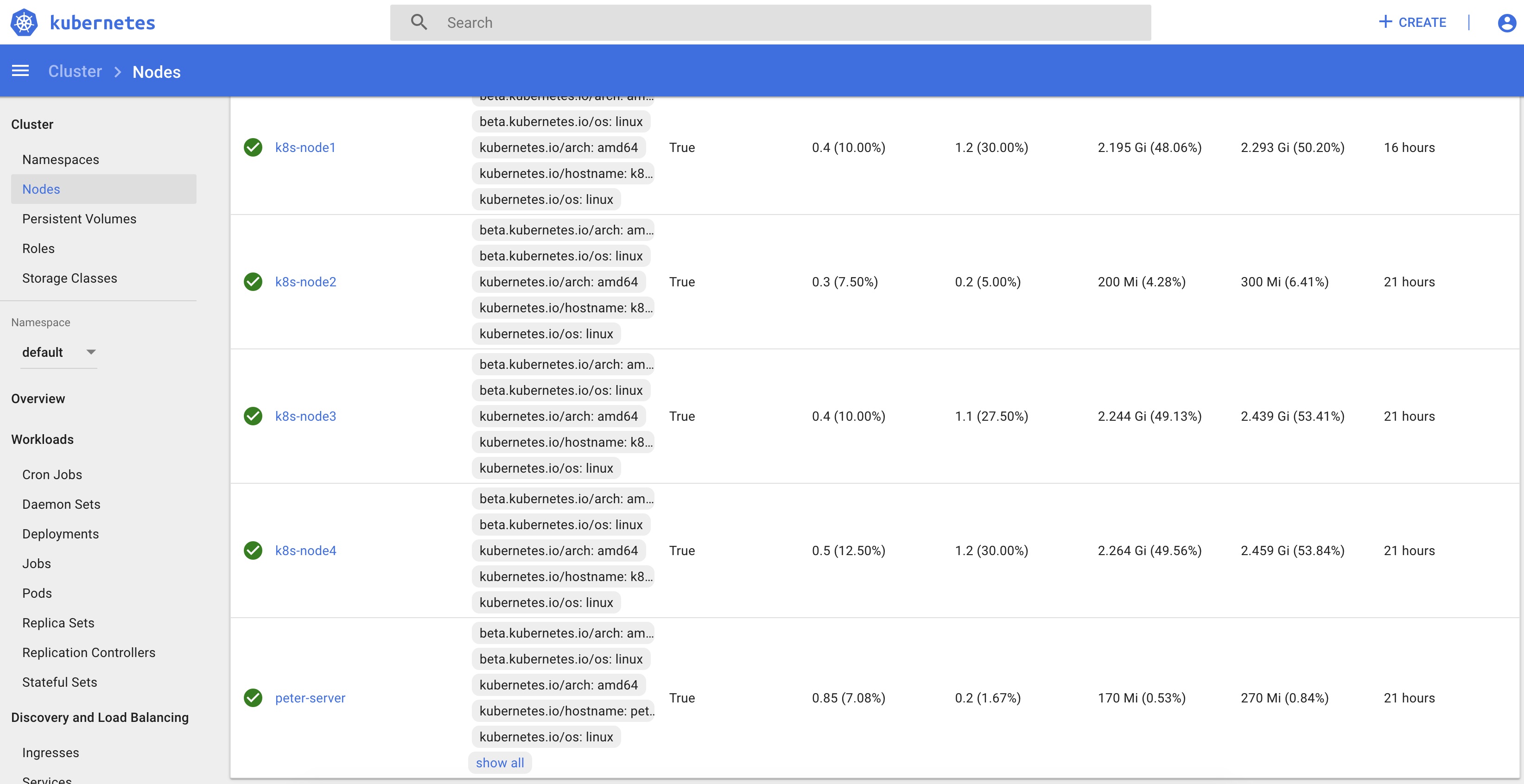

➜ ~ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-node1 Ready <none> 18h v1.15.3 192.168.2.150 <none> CentOS Linux 7 (Core) 3.10.0-957.27.2.el7.x86_64 docker://19.3.1

k8s-node2 Ready <none> 23h v1.15.3 192.168.2.151 <none> CentOS Linux 7 (Core) 3.10.0-957.27.2.el7.x86_64 docker://19.3.1

k8s-node3 Ready <none> 23h v1.15.3 192.168.2.152 <none> CentOS Linux 7 (Core) 3.10.0-957.27.2.el7.x86_64 docker://19.3.1

k8s-node4 Ready <none> 23h v1.15.3 192.168.2.153 <none> CentOS Linux 7 (Core) 3.10.0-957.27.2.el7.x86_64 docker://19.3.1

master Ready master 23h v1.15.3 192.168.2.110 <none> Ubuntu 18.04.3 LTS 5.0.0-25-generic docker://19.3.1

Environment: host machine (master node)

@ Make sure Virtualization Technology (Intel VT-x or AMD-V) is enabled in your host machine's BIOS.

That is all the host machine needs in order to run multiple nodes.

Setting up each node

Vagrantfile for nodes.

# save this file as Vagrantfile

Vagrant.configure(2) do |config|

config.vm.box = "centos/7"

config.vm.synced_folder '.', '/vagrant', disabled: true

# change IP to your local ip address

config.vm.network "public_network", ip: "192.168.2.150",bridge: "eth1", :mac => "8CA3ABCE4001"

# change hostname to k8s-node1, k8s-node2....

config.vm.hostname = "k8s-node1"

config.vm.provision "shell",

inline: "sudo iptables -P FORWARD ACCEPT"

# load prepare_k8s.sh script

config.vm.provision "shell", path: "./../prepare_k8s.sh", keep_color: true

config.vm.provider :virtualbox do |virtualbox, override|

# change name, memory, cpu

virtualbox.name = "node1"

virtualbox.memory = 5000

virtualbox.cpus = 4

end

end

You should adjust these settings (IP address, bridge interface, hostname, memory, CPUs) to match your hardware and network.

prepare_k8s.sh

#! /bin/sh

# give the nodes Google's DNS server; otherwise DNS lookups on the nodes sometimes fail.

sudo cat <<EOF > /etc/resolv.conf

nameserver 8.8.8.8

EOF

# update and install the latest docker.

sudo yum update -y

sudo yum install -y vim yum-utils device-mapper-persistent-data lvm2 net-tools

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo -y

sudo yum install -y docker-ce docker-ce-cli containerd.io

sudo systemctl start docker.service

sudo systemctl enable docker.service

# install kubernetes

sudo cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kube*

EOF

# set SELINUX enforcing -> permissive

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

sudo sed -i 's/^GRUB_DEFAULT=saved$/GRUB_DEFAULT=0/' /etc/default/grub

sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

sudo systemctl enable --now kubelet

sudo cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sudo sysctl --system

# important, turn off swap.

sudo swapoff -a

Directory config

For Vagrant, I suggest the following folder structure.

kubernetes/node1/Vagrantfile

kubernetes/node2/Vagrantfile

kubernetes/node3/Vagrantfile

kubernetes/node4/Vagrantfile

kubernetes/prepare_k8s.sh

Let’s boot up each node.

# cd into node1, node2, node3 and node4 in turn

$ sudo vagrant up

......some bootup message......

# ssh login to node1

$ sudo vagrant ssh

# make sure swap is disabled.

[vagrant@k8s-node1 ~]$ cat /etc/fstab

#

# /etc/fstab

UUID=8ac075e3-1124-4bb6-bef7-xxxxxx / xfs defaults 0 0

#/swapfile none swap defaults 0 0

If swap is still enabled, turn it off with sudo swapoff -a and comment out the swap entry in /etc/fstab so that it stays off after a reboot.
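As a minimal sketch of the /etc/fstab change, the helper below prints a copy of an fstab-style file with every active swap entry commented out. The name comment_out_swap is hypothetical, and the function only writes to standard output, so nothing on disk changes until you decide to apply the result.

```shell
#!/bin/sh
# comment_out_swap FILE   (hypothetical helper name, not a standard tool)
# Print FILE with every active swap entry commented out.
# The input file itself is not modified.
comment_out_swap() {
    # Leave existing comment lines alone; prefix "#" to any other line
    # that contains the word "swap" surrounded by whitespace.
    sed -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}
```

Running comment_out_swap /etc/fstab lets you preview the result. Once it looks right, save the output to a temporary file and copy it over /etc/fstab with sudo, keeping a backup of the original.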

Do these steps on every node and on the master (node1-node4, master).

Then check the IP address of each node.

# ssh to each node

$ ifconfig eth1

You should see addresses like the following. Make sure all nodes and the master are in the same IP subnet.

master: 192.168.2.110

node1: 192.168.2.150

node2: 192.168.2.151

node3: 192.168.2.152

node4: 192.168.2.153
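The subnet check can be sketched in a few lines of shell. This example assumes a /24 netmask (255.255.255.0), which is an assumption about your LAN and not something the steps above guarantee; same_slash24 is a hypothetical helper name.

```shell
#!/bin/sh
# same_slash24 IP [IP...]   (hypothetical helper name)
# Succeed only if every address shares its first three octets with the
# first one, i.e. all addresses sit in the same /24 network.
same_slash24() {
    first_prefix=${1%.*}          # strip the last octet of the first IP
    for ip in "$@"; do
        [ "${ip%.*}" = "$first_prefix" ] || return 1
    done
    return 0
}

if same_slash24 192.168.2.110 192.168.2.150 192.168.2.151 192.168.2.152 192.168.2.153; then
    echo "all hosts are in the same /24 subnet"
else
    echo "subnet mismatch"
fi
```

If your network uses a different prefix length, the comparison has to be done on the masked addresses instead of on the first three octets.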

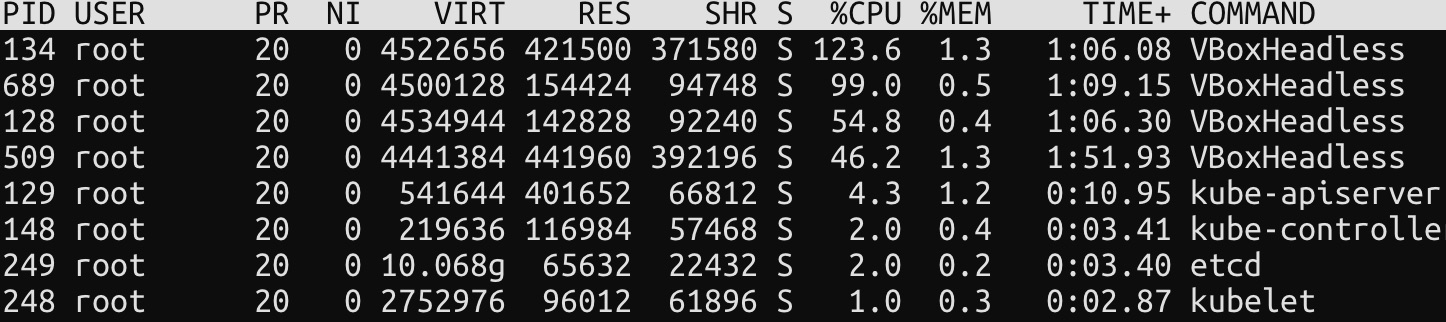

On the master node, you can run top. You should see four VBoxHeadless processes running.

Let’s initialize the master node

Our master runs Ubuntu, so we use this script.

# Ubuntu, Debian

apt-get update && apt-get install -y apt-transport-https curl

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://apt.kubernetes.io/ kubernetes-xenial main

EOF

apt-get update

apt-get install -y kubelet kubeadm kubectl

apt-mark hold kubelet kubeadm kubectl

If your master’s package manager is yum, use this script.

# Centos

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

# Set SELinux in permissive mode (effectively disabling it)

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

systemctl enable --now kubelet

kubeadm installation reference

@ The nodes already installed kubeadm and kubelet through prepare_k8s.sh, so we don’t need to repeat this on each node.

Initialize kubeadm on the master node.

kubeadm init

$ sudo kubeadm init --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: vX.Y.Z

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

.........

kubeadm join --token <token> <master-ip>:<master-port> --discovery-token-ca-cert-hash sha256:<hash>

# the output should end with a kubeadm join command like the one above; keep it for joining the nodes.

Setting up kubectl for your Kubernetes cluster.

Configure kubectl so that it can access your master node.

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

Currently, your cluster has only the master node.

Let’s join the other nodes to your cluster.

# use `sudo vagrant ssh` to log in to each node.

$ sudo kubeadm join 192.168.2.xxxx:6443 --token xxxxxxxxx --discovery-token-ca-cert-hash sha256:xxxxxxxxxxxxx

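Add --node-ip=IP_ADDRESS to kubeadm-flags.env, where IP_ADDRESS is the node’s IP. Remember to use the address that is in the same subnet as your Ethernet network. Vagrant VMs on VirtualBox have a NAT interface as their first network interface, so without this flag the kubelet may register the NAT address instead of the bridged one.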

$ sudo vim /var/lib/kubelet/kubeadm-flags.env

KUBELET_KUBEADM_ARGS="--cgroup-driver=cgroupfs --network-plugin=cni --pod-infra-container-image=k8s.gcr.io/pause:3.1 --node-ip=192.168.2.150"

# restart kubelet

$ sudo systemctl restart kubelet

Do these steps on each node.
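Editing the file by hand on four nodes is error-prone, so here is a hedged sketch of the same change with sed. The helper name add_node_ip is hypothetical, and the function assumes the file holds a single KUBELET_KUBEADM_ARGS="..." line as shown above. It prints the modified content and does not touch the file.

```shell
#!/bin/sh
# add_node_ip FILE IP   (hypothetical helper name)
# Print FILE with " --node-ip=IP" appended inside the quoted
# KUBELET_KUBEADM_ARGS value. Lines that already contain --node-ip are
# left unchanged, so running it twice has no further effect.
add_node_ip() {
    sed -E '/--node-ip=/!s/^(KUBELET_KUBEADM_ARGS=".*)"$/\1 --node-ip='"$2"'"/' "$1"
}
```

For example, add_node_ip /var/lib/kubelet/kubeadm-flags.env 192.168.2.150 shows what the file would look like on node1. After reviewing the output, write it back with sudo and restart the kubelet as described above.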

Pod Network add-on

Please also check the kubeadm docs for more details.

We will use flannel.

WARNING

@ Also, beware, that your Pod network must not overlap with any of the host networks as this can cause issues. If you find a collision between your network plugin’s preferred Pod network and some of your host networks, you should think of a suitable CIDR replacement and use that during kubeadm init with --pod-network-cidr and as a replacement in your network plugin’s YAML.

If an existing network on your hosts uses the same subnet as --pod-network-cidr, you should choose a different CIDR.
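To make the overlap rule concrete, the sketch below compares two CIDR blocks in plain shell arithmetic. Two blocks overlap when their network addresses are equal under the shorter of the two prefix lengths. The helper names ip_to_int and cidrs_overlap are hypothetical, and 192.168.2.0/24 is only assumed to be the host network, based on the addresses used in this post.

```shell
#!/bin/sh
# ip_to_int A.B.C.D   (hypothetical helper name)
# Print the IPv4 address as a single integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the dotted quad into four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# cidrs_overlap CIDR1 CIDR2   (hypothetical helper name)
# Succeed if the two blocks share at least one address.
cidrs_overlap() {
    a_ip=$(ip_to_int "${1%/*}"); a_len=${1#*/}
    b_ip=$(ip_to_int "${2%/*}"); b_len=${2#*/}
    # Use the shorter prefix, which describes the larger block.
    if [ "$a_len" -lt "$b_len" ]; then len=$a_len; else len=$b_len; fi
    mask=$(( (4294967295 << (32 - $len)) & 4294967295 ))
    [ $(( $a_ip & $mask )) -eq $(( $b_ip & $mask )) ]
}

# Pod network from this post versus the assumed host network.
if cidrs_overlap 10.244.0.0/16 192.168.2.0/24; then
    echo "overlap: pick another --pod-network-cidr"
else
    echo "no overlap"
fi
```

With the values above the check reports no overlap, because the two blocks differ in their first 16 bits.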

Apply network add-on to your cluster.

$ kubectl apply -f <add-on.yaml>

For flannel to work correctly, you must pass --pod-network-cidr=10.244.0.0/16 to kubeadm init.

Set /proc/sys/net/bridge/bridge-nf-call-iptables to 1 by running sysctl net.bridge.bridge-nf-call-iptables=1 to pass bridged IPv4 traffic to iptables’ chains. This is a requirement for some CNI plugins to work.

(We already did this on prepare_k8s.sh)

Note that flannel works on amd64, arm, arm64, ppc64le and s390x under Linux. Windows (amd64) is claimed as supported in v0.11.0 but the usage is undocumented.

# apply flannel to your cluster.

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/62e44c867a2846fefb68bd5f178daf4da3095ccb/Documentation/kube-flannel.yml

So why do we need to initialize the CIDR as 10.244.0.0/16?

# at kube-flannel.yml we can see this network config.

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

If you want to use a different CIDR, run sudo kubeadm init --pod-network-cidr=10.32.0.0/16 and change the flannel Network value to 10.32.0.0/16 as well.
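One way to change the flannel Network value without editing the manifest by hand is sketched below. It assumes you have first downloaded the manifest to a local file named kube-flannel.yml (for example with curl -o) and that the file contains a "Network": "..." entry like the one shown above. The helper name set_flannel_network is hypothetical.

```shell
#!/bin/sh
# set_flannel_network FILE CIDR   (hypothetical helper name)
# Print FILE with the value of the "Network" key replaced by CIDR.
# "#" is used as the sed delimiter because the CIDR contains "/".
set_flannel_network() {
    sed -E 's#("Network":[[:space:]]*)"[^"]*"#\1"'"$2"'"#' "$1"
}
```

The result can be piped straight into kubectl, for example set_flannel_network kube-flannel.yml 10.32.0.0/16 | kubectl apply -f -, or saved to a new file and reviewed first.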

After you apply flannel to your cluster, you can check the network interfaces on node1-node4.

You will see two new interfaces, flannel.1 and cni0.

# I use CIDR 10.32.0.0/16. ifconfig will display 10.32.x.x

[vagrant@k8s-node1 ~]$ ifconfig flannel.1

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.32.4.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::24ae:5eff:xxxx:xxxx prefixlen 64 scopeid 0x20<link>

ether 26:ae:5e:29:57:d8 txqueuelen 0 (Ethernet)

RX packets 994894 bytes 1042168344 (993.8 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 361101 bytes 400393922 (381.8 MiB)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0

[vagrant@k8s-node1 ~]$ ifconfig cni0

cni0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.32.4.1 netmask 255.255.255.0 broadcast 0.0.0.0

inet6 fe80::1876:f4ff:xxxx:xxxx prefixlen 64 scopeid 0x20<link>

ether 1a:76:f4:0d:6c:5a txqueuelen 1000 (Ethernet)

RX packets 460962 bytes 353293465 (336.9 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 478311 bytes 1050286009 (1001.6 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Let’s look at the network interfaces on another node.

[vagrant@k8s-node2 ~]$ ifconfig cni0

cni0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.32.3.1 netmask 255.255.255.0 broadcast 0.0.0.0

inet6 fe80::c468:e0ff:xxxx:xxxx prefixlen 64 scopeid 0x20<link>

ether c6:68:e0:40:12:31 txqueuelen 1000 (Ethernet)

RX packets 592707 bytes 157355303 (150.0 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 522413 bytes 655781024 (625.4 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[vagrant@k8s-node2 ~]$ ifconfig flannel.1

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.32.3.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::c003:63ff:xxxx:xxxx prefixlen 64 scopeid 0x20<link>

ether c2:03:63:59:12:d1 txqueuelen 0 (Ethernet)

RX packets 291008 bytes 166468118 (158.7 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 300398 bytes 140955741 (134.4 MiB)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0

Check whether your cluster is ready.

# at master node

$ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-node1 Ready <none> 18h v1.15.3 192.168.2.150 <none> CentOS Linux 7 (Core) 3.10.0-957.27.2.el7.x86_64 docker://19.3.1

k8s-node2 Ready <none> 23h v1.15.3 192.168.2.151 <none> CentOS Linux 7 (Core) 3.10.0-957.27.2.el7.x86_64 docker://19.3.1

k8s-node3 Ready <none> 23h v1.15.3 192.168.2.152 <none> CentOS Linux 7 (Core) 3.10.0-957.27.2.el7.x86_64 docker://19.3.1

k8s-node4 Ready <none> 23h v1.15.3 192.168.2.153 <none> CentOS Linux 7 (Core) 3.10.0-957.27.2.el7.x86_64 docker://19.3.1

master Ready master 23h v1.15.3 192.168.2.110 <none> Ubuntu 18.04.3 LTS 5.0.0-25-generic docker://19.3.1

The status of every node, including the master, should be Ready.
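If you prefer not to read the table by eye, a small filter can list the nodes that are not Ready. This is a sketch that relies only on the column layout shown above (NAME first, STATUS second); not_ready_nodes is a hypothetical helper name.

```shell
#!/bin/sh
# not_ready_nodes   (hypothetical helper name)
# Read `kubectl get node` output on stdin and print the name of every
# node whose STATUS column is anything other than exactly "Ready".
not_ready_nodes() {
    # NR > 1 skips the header row.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Use it as kubectl get node | not_ready_nodes. Empty output means every node reports Ready. Note that a cordoned node shows a status such as Ready,SchedulingDisabled and would therefore be listed too.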

Congrats, you now have a Kubernetes cluster.

You will also need to set up NFS storage; otherwise, your containers can’t store data persistently. Please check my other article.

If you run into network problems, you can try flushing the iptables rules.

sudo iptables -F && sudo iptables -t nat -F && sudo iptables -t mangle -F && sudo iptables -X
